Revolutionize Prompt Engineering with Next-Gen LLM Evaluation

Promptkey seamlessly generates, evaluates, and optimizes prompts across multiple LLMs with powerful datasets, real-time evaluation, and performance insights.

Comprehensive Features to Yield the Best Prompts and AI Performance

Comprehensive AI model assessment that combines cutting-edge technology with human expertise.

User-Centric Evaluations

Designed with end-users in mind, our platform provides nuanced insights that go beyond traditional metrics.

Subject Matter Expert Review

Leverage detailed assessments from industry experts with deep domain knowledge and technical expertise.

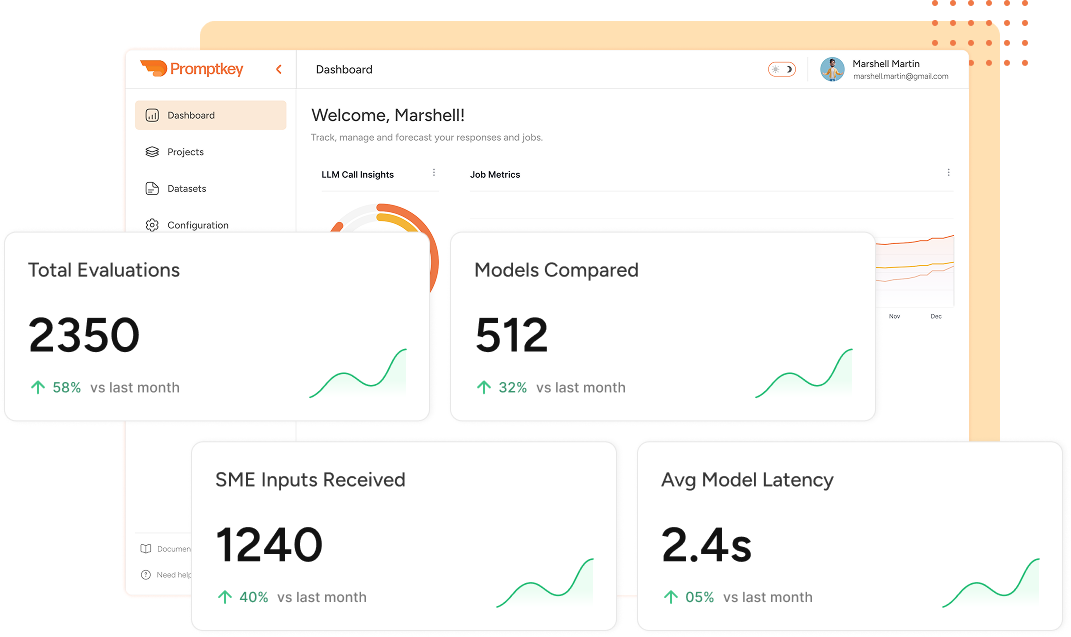

Moderation Dashboard

Gain real-time AI performance insights with our intuitive moderation dashboard. Track key metrics, monitor outputs, and ensure quality, compliance, and consistency.

Trusted by Leading Enterprises

Manage & Generate Your Datasets

Organize, curate, and generate high-quality datasets tailored to your evaluation needs. Effortlessly version datasets to track performance over time.
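Versioning can be as simple as fingerprinting each snapshot's content. The following minimal Python sketch is a hypothetical illustration, not Promptkey's API: `VersionedDataset`, `DatasetVersion`, and `commit` are assumed names, and hashing canonical JSON with SHA-256 is an assumed design that maps identical data to the same version regardless of key order.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class DatasetVersion:
    """One immutable snapshot of a dataset (hypothetical structure)."""
    version: int
    digest: str      # SHA-256 of the canonical JSON of the records
    records: tuple   # copy of the records at this version


@dataclass
class VersionedDataset:
    name: str
    versions: list = field(default_factory=list)

    def commit(self, records: list[dict]) -> DatasetVersion:
        """Store a new version only if the content actually changed."""
        # sort_keys makes the digest independent of dict key order
        canonical = json.dumps(records, sort_keys=True, separators=(",", ":"))
        digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
        if self.versions and self.versions[-1].digest == digest:
            return self.versions[-1]  # unchanged content: reuse the latest version
        snapshot = DatasetVersion(
            version=len(self.versions) + 1,
            digest=digest,
            records=tuple(json.loads(canonical)),
        )
        self.versions.append(snapshot)
        return snapshot
```

Because identical content yields the same digest, re-uploading unchanged data does not create a new version, so score changes between versions point to real data changes.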

Create Powerful Prompts Connected to Your Data

Design effective prompts that seamlessly integrate with your datasets, ensuring context-rich evaluations for consistent model performance.
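Connecting a prompt to a dataset typically means treating the prompt as a template whose placeholders map to dataset columns. Here is a minimal sketch using Python's built-in `str.format` placeholders; `template_fields` and `render_prompts` are hypothetical helpers, not part of any Promptkey API.

```python
from string import Formatter


def template_fields(template: str) -> set[str]:
    """Return the placeholder names used in a str.format-style template."""
    # Formatter.parse yields (literal_text, field_name, format_spec, conversion)
    return {name for _, name, _, _ in Formatter().parse(template) if name}


def render_prompts(template: str, records: list[dict]) -> list[str]:
    """Fill the template once per dataset record, failing loudly on missing columns."""
    needed = template_fields(template)
    prompts = []
    for i, record in enumerate(records):
        missing = needed - set(record)
        if missing:
            raise KeyError(f"record {i} is missing fields: {sorted(missing)}")
        prompts.append(template.format(**record))
    return prompts


if __name__ == "__main__":
    template = "Context: {context}\nQuestion: {question}\nAnswer:"
    rows = [{"context": "Refunds take 5 days.", "question": "How long do refunds take?"}]
    print(render_prompts(template, rows)[0])
```

Checking placeholders up front means a template/dataset mismatch surfaces before any model is called, rather than as a half-finished evaluation run.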

Connect Multiple LLMs with Flexible Configurations

Integrate multiple LLMs with ease. Experiment with presets and adjust parameters such as temperature, maximum output tokens, top-p, and frequency penalty, all in one interface.
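Comparing several LLMs usually comes down to running the same prompt under every combination of model and parameter preset. The sketch below uses hypothetical model names (`model-a`, `model-b`) and a `Preset` class of our own; its validation ranges mirror OpenAI-style conventions and are assumptions, since each provider documents its own limits.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Preset:
    """Sampling parameters for one configuration (field names are illustrative)."""
    name: str
    temperature: float = 1.0
    max_output_tokens: int = 512
    top_p: float = 1.0
    frequency_penalty: float = 0.0

    def __post_init__(self):
        # Assumed OpenAI-style ranges; other providers may accept different values.
        if not 0.0 <= self.temperature <= 2.0:
            raise ValueError("temperature must be in [0, 2]")
        if not 0.0 < self.top_p <= 1.0:
            raise ValueError("top_p must be in (0, 1]")
        if not -2.0 <= self.frequency_penalty <= 2.0:
            raise ValueError("frequency_penalty must be in [-2, 2]")
        if self.max_output_tokens <= 0:
            raise ValueError("max_output_tokens must be positive")


def run_matrix(models: list[str], presets: list[Preset]) -> list[tuple[str, Preset]]:
    """Every (model, preset) pair to run against the same prompt and dataset."""
    return list(product(models, presets))


if __name__ == "__main__":
    presets = [Preset("precise", temperature=0.2), Preset("creative", temperature=1.2, top_p=0.9)]
    for model, preset in run_matrix(["model-a", "model-b"], presets):
        print(model, preset.name, preset.temperature)
```

Validating presets when they are created, rather than when a provider rejects a request, keeps a large comparison run from failing partway through.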

Evaluate LLM Responses with SMEs

Leverage SME insights to assess model outputs accurately. Collaborate, score, and analyze responses to ensure data-driven evaluation at scale.
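One common way to combine several SMEs' judgments is to average their scores per response and flag responses where the experts disagree. This Python sketch assumes a 1 to 5 scoring scale and a hypothetical `aggregate_sme_scores` helper; the disagreement threshold (population standard deviation above 1.0) is an illustrative choice, not a Promptkey default.

```python
from collections import defaultdict
from statistics import mean, pstdev


def aggregate_sme_scores(grades, disagreement_threshold=1.0):
    """
    grades: iterable of (response_id, sme_id, score) tuples, e.g. on a 1-5 scale.
    Returns {response_id: {"mean": ..., "n": ..., "needs_review": bool}}.
    A response is flagged when SMEs disagree (population std dev above threshold).
    """
    by_response = defaultdict(list)
    for response_id, _sme_id, score in grades:
        by_response[response_id].append(score)

    summary = {}
    for response_id, scores in by_response.items():
        spread = pstdev(scores) if len(scores) > 1 else 0.0
        summary[response_id] = {
            "mean": mean(scores),
            "n": len(scores),
            "needs_review": spread > disagreement_threshold,
        }
    return summary
```

Flagging high-disagreement responses lets a team spend expert time on the ambiguous cases instead of re-reviewing everything.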

The Right Way to Evaluate Multiple LLM Responses

Streamline the entire evaluation lifecycle—from dataset management to expert-driven assessments—within a single, powerful platform.

- Manage & Generate Your Datasets

Organize, curate, and generate high-quality datasets tailored to your evaluation needs. Effortlessly version datasets to track performance over time.

- Create Powerful Prompts Connected to Your Data

- Connect Multiple LLMs with Flexible Configurations

- Evaluate LLM Responses with SMEs

Comprehensive Support for Models and Providers

State-of-the-Art Response Comparison & Grading

Compare model outputs side by side with intuitive grading powered by user-centric metrics.
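Side-by-side grading is often summarized as a pairwise win rate. The sketch below shows that approach, counting a tie as half a win for each model; `win_rates` is a hypothetical helper, and win rate is only one possible metric, not necessarily the one Promptkey uses.

```python
from collections import Counter


def win_rates(comparisons):
    """
    comparisons: iterable of (model_a, model_b, winner), where winner is
    model_a, model_b, or "tie". Ties count as half a win for each side.
    Returns {model: win_rate} over all comparisons the model appeared in.
    """
    wins = Counter()
    games = Counter()
    for a, b, winner in comparisons:
        games[a] += 1
        games[b] += 1
        if winner == "tie":
            wins[a] += 0.5
            wins[b] += 0.5
        elif winner in (a, b):
            wins[winner] += 1
        else:
            raise ValueError(f"winner {winner!r} is not {a!r}, {b!r}, or 'tie'")
    return {model: wins[model] / games[model] for model in games}
```

Win rates are easy to read but ignore how much better one answer was; a rubric score per response captures that at the cost of more grading effort.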

Simple Steps to Evaluate Your Prompts

Evaluating your AI prompts is crucial for building high-performing, reliable AI applications. A well-crafted prompt is the key to the best output. Follow these simple steps to get started:

Create your project and select a dataset

Start by creating your project and choosing the right dataset. A well-curated dataset ensures your prompts are evaluated against high-quality, relevant data, setting the foundation for the best results.

Create or update your prompt

Design your prompt carefully to achieve the desired model behavior. Test different variations and refine your wording to optimize clarity, specificity, and effectiveness.

Select AI models to compare

Define evaluation parameters and involve SMEs

Set clear evaluation parameters such as accuracy, response time, and relevance. Involve subject matter experts (SMEs) to provide qualitative feedback and ensure the model meets business expectations.
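Evaluation parameters like accuracy, response time, and relevance can be combined into a single weighted score once each is normalized to a 0 to 1 range. This sketch assumes a hypothetical latency budget of 2 seconds and caller-supplied weights; `latency_score` and `weighted_score` are illustrative helpers, not part of Promptkey.

```python
def latency_score(seconds: float, budget_s: float = 2.0) -> float:
    """1.0 at or under the latency budget, decaying toward 0 as responses get slower."""
    if seconds <= 0:
        raise ValueError("latency must be positive")
    return min(1.0, budget_s / seconds)


def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-criterion scores, each already normalized to [0, 1]."""
    missing = set(weights) - set(scores)
    if missing:
        raise KeyError(f"missing scores for: {sorted(missing)}")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(weights[k] * scores[k] for k in weights) / total


if __name__ == "__main__":
    scores = {"accuracy": 0.8, "relevance": 0.6, "response_time": latency_score(4.0)}
    weights = {"accuracy": 2, "relevance": 1, "response_time": 1}
    print(round(weighted_score(scores, weights), 3))
```

Keeping weights explicit makes the trade-off visible: doubling the weight on accuracy, as in the usage lines, states plainly that correctness matters more than speed for this project.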

Run evaluations and analyze results with interactive dashboards

Flexible Pricing Plans Tailored to Your Needs

Basic

$0 / Month

- Limited evaluations, access to standard models.

Pro

$99 / Month

- Advanced evaluations, SME inputs, models, analytics.

Enterprise

Custom Pricing

- API integrations, custom models, and priority support.

Evaluation Jobs

- Pay-as-you-go

- Response Comparison

- Custom Grading Parameters

- Dataset Management

- Manual Grading

- SME Grading

- Auto Evaluation Metrics

Pay-as-you-go

Jury Judge LLM

- Pay-as-you-go

- Response Comparison

- Custom Grading Parameters

- Dataset Management

- Comprehensive Reports

- Custom and Proprietary Metrics

- Actionable Recommendations

Pay-as-you-go

Frequently Asked Questions

- Identify datasets, models, and presets: Users select the datasets, models, and any existing presets for the evaluation, then configure custom grading parameters specific to the workload.

- Understand presets: A preset is a model configuration that includes parameters like max_tokens, temperature, top_p, and top_k. These settings control the behavior and output quality of the model. Each model can have up to three presets, offering flexibility to test different configurations.

- Kick off the evaluation: Once everything is set, the user starts the evaluation process.

- Generate and store LLM responses: The system generates LLM responses based on the provided prompts and datasets, and the results are securely stored.

- User and expert grading: Users can review and grade the responses. Subject matter experts (SMEs) can also be invited to provide their evaluations.

- Full evaluation with LLM as a judge: Once human grading is complete, users can trigger a full evaluation where the LLM acts as a judge, using the human feedback to evaluate other records and ensure consistency.
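The workflow above can be sketched in a few lines of Python. This is a hypothetical illustration, not Promptkey's implementation: `ModelPresets`, `Record`, `build_judge_prompt`, and `judge_remaining` are assumed names, `call_llm` stands in for whatever client sends a prompt to the judge model, and using human-graded records as few-shot examples is one assumed way the judge could use the human feedback. Only the three-presets-per-model limit and the preset parameters come from the FAQ.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

MAX_PRESETS_PER_MODEL = 3  # limit stated in the FAQ


@dataclass
class ModelPresets:
    """Named presets for one model, each holding max_tokens, temperature, top_p, top_k."""
    model: str
    presets: dict = field(default_factory=dict)

    def add(self, name: str, *, max_tokens: int, temperature: float,
            top_p: float, top_k: int) -> None:
        # Updating an existing preset is allowed; adding a fourth is not.
        if name not in self.presets and len(self.presets) >= MAX_PRESETS_PER_MODEL:
            raise ValueError(f"{self.model} already has {MAX_PRESETS_PER_MODEL} presets")
        self.presets[name] = {"max_tokens": max_tokens, "temperature": temperature,
                              "top_p": top_p, "top_k": top_k}


@dataclass
class Record:
    prompt: str
    response: str
    human_grade: Optional[int] = None  # None until a user or SME grades it


def build_judge_prompt(graded: list[Record], target: Record, max_examples: int = 3) -> str:
    """Few-shot judge prompt: human-graded examples first, then the ungraded target."""
    parts = ["Grade each response from 1 to 5, consistent with the human grades shown."]
    for r in graded[:max_examples]:
        parts.append(f"Prompt: {r.prompt}\nResponse: {r.response}\nGrade: {r.human_grade}")
    parts.append(f"Prompt: {target.prompt}\nResponse: {target.response}\nGrade:")
    return "\n\n".join(parts)


def judge_remaining(records: list[Record], call_llm: Callable[[str], str]) -> dict[int, str]:
    """Send every ungraded record to the judge; returns {record index: judge output}."""
    graded = [r for r in records if r.human_grade is not None]
    return {i: call_llm(build_judge_prompt(graded, r))
            for i, r in enumerate(records) if r.human_grade is None}


if __name__ == "__main__":
    records = [Record("2+2?", "4", human_grade=5),
               Record("Capital of France?", "Paris"),
               Record("3+3?", "7", human_grade=1)]
    # A stub judge that echoes the prompt length; swap in a real model client here.
    print(judge_remaining(records, lambda prompt: f"{len(prompt)} chars"))
```

Showing the judge both a high and a low human grade anchors its scale, which is what keeps LLM-assigned grades consistent with the human ones.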